Unity 3D, Virtual/Augmented Reality & My Research

As a graduate student of theatre and drama in the ITS program at UW–Madison, I'm currently in the last stages of my coursework and preparations for my prelims. Due to my research interests in participatory performance utilizing augmented or virtual reality and video games, I've received permission from my area faculty to replace the written language proficiency requirement with something much more applicable to my subject matter: game design, and specifically game design with the Unity 3D engine.

Unity is useful to my research and eventual dissertation. The groundwork for my current efforts comes from my master's thesis, which examined video games and their influence on and application in participatory performance in general and theatre for youth in particular. Part of what I concluded in that thesis was that the culture around video games, the technology used to design and build them, and their approaches to agency are influencing current and future audiences and practitioners of theatrical performance. The way audiences expect to interact with human and computer-generated images in live performance is clearly connected to the genre of video games, and more importantly, the way that participatory performance is devised is also influenced by the types of agency expected from such an audience.

Unity is one of the most ubiquitous game development platforms, and so its influence on the methods and culture of video games and game development is significant. I believe that this influence is only likely to increase. Because I see video games influencing participatory performance, I believe Unity will have a strong influence on technology, technique, and reception in the future of theatre and performance. As recent experiments like the UW Department of Theatre and Drama's ALICE project have shown, augmented reality has a place in traditional theatre spaces and can provide new techniques for theatrical performance. Unity was the tool used to create the environments in ALICE. Unity is also one of the leading engines for development of Virtual Reality experiences using headsets like the Oculus Rift, HTC Vive, and Google's Cardboard. While VR, much like 3-D films, seems perpetually on the cusp of really taking off, I suspect that much of the truly innovative participatory performance work of the next decade will utilize VR to craft the setting and action of those works. Unity will likely be one of the dominant engines of those experiences. By gaining some proficiency in both the technical and design skills associated with the tool, I should have a more nuanced understanding of both the technical how and the rhetorical why of choices made by those creating a wide variety of games, performances, and experiences.

This proficiency is, I believe, comparable to the proficiency gained by those taking the language test for my program, and allows for a similar type of skill set. Those taking the language test are not expected to be fluent speakers, but rather to have or gain a "reader's" understanding, so as to work with theatrical texts in their original language rather than through translation. Similarly, I believe learning Unity will provide me with a "reader's" knowledge of a vital portion of my research subjects.

To prepare for this, and to justify the choice, I offer this article as an introduction to Unity, an overview of VR and AR, and a survey of the applications of Unity most relevant to my future work, including my dissertation.

History & Ubiquity

In the early 2000s, three programmers from Copenhagen saw their first game, Gooball, fail to get any success or attention. In addition to the frustration of failure, they had suffered long hours, poor pay, and a competitive market. David Helgason, Nicholas Francis, and Joachim Ante realized that part of the problem was that everything associated with the development of a game--the engine itself, art assets, backend technology--was siloed within major studios. Each studio had its own proprietary technology, meaning that small start-ups like theirs had to reinvent the wheel on a shoestring--simulating physics, shadows, textures, and other features required either a brand new product or expensive licensing of every single one of these aspects from a major company. As games became more sophisticated, this process became more difficult, took longer, and required significant capital to gather all the appropriate technologies into one game. The three decided that instead of attempting to make another game, they would address the problem itself. In 2004, they founded Unity Technologies with two goals: to develop a combined toolset for game development, one that provided all of the building blocks at a low cost, and to share further development and innovations back to the whole community using their product.

Unusually for a game company, the first version of Unity was presented in 2005 at Apple's Worldwide Developers Conference, and worked exclusively on Macs. The market for video games on the Mac was and is quite small, so they quickly moved on to providing support for first Windows, then several game consoles and the burgeoning mobile phone game market. More importantly, with Unity 3 and 4 they gradually caught up with the larger studios’ collection of proprietary tools, adding support for technologies such as the DirectX 11 multimedia suite and PhysX, an SDK for simulating physics used by many major games today.

Simultaneously, Unity Technologies worked to support a wider and wider array of art assets, file formats, and graphics engines used by a vast array of systems. This means that today, in spite of the fact that Windows, Macs and Linux, Android and iOS, and Nintendo's Wii all use different combinations of software and hardware, a developer can build one game in Unity and release it on all of them; in the company's words, "Author Once, Deploy Everywhere." The art assets can come from any one of dozens of existing drafting, sketching, and photo editing programs. When using Unity, a game developer can use related programs they already know, buy the remaining tools they need at a low cost, and increase their potential return by being able to develop for a wider array of systems.

This democratized approach goes beyond just the technology. Users can download and learn Unity for free, only buying a professional license when they wish to actually sell a game themselves. The pricing itself is also tiered, with discounts for education and non-profit groups, and also a lower cost for companies making less than $100,000. With the latest release, many of the new functions in Unity 5 are intended to let users simulate architectural designs or create virtual art installations, expanding the potential user base and audience for experiences, especially for small groups.

Unity is now a behemoth in the field of video game and virtual experience development, utilized by huge companies and one-person startups. Because of this, Unity is well situated to be a catalyst for the next steps in participatory experiences.

VR Then & Now (and then again...)

Virtual Reality is not new. Whether you believe the first references to virtual reality are in science fiction, or the early computer interfaces espoused in Douglas Engelbart's "The Mother of All Demos," or even in Antonin Artaud's The Theater and Its Double, the concept of an illusory yet actionable environment has been explored for the better part of a century. As a commercial entertainment possibility, VR has been perpetually on the cusp of taking off. Early efforts at 3D headsets and gestural controls with haptic feedback go back to the 1950s, with experiments that included complex rigging of computer monitors to users’ shoulders, or installations that used four walls and an interwoven group of projectors. Since then VR has existed in a curious middle ground between video games for a broader audience and simulations for specific applications like military or flight training.

Left: Brenda Laurel's keynote. Right: "Menagerie" - a Virtual Environment installation ©Telepresence Research, Inc., 1993

Brenda Laurel's Computers as Theatre is a most useful snapshot of both the scholarship related to VR as performance during its last heyday in the 1990s and the perpetual "on-the-cusp-ness" of VR as a broadly utilized technology and medium of performance. In 1991 Laurel described the potential for a "dionysian" experience in virtual reality, but just two years later in a new chapter of the book she grapples with the fading enthusiasm for VR in the video game market, one that "over-promised and under-delivered." VR returned to highly siloed projects, each built for a single experience or installation on a case-by-case basis, rather than becoming the new way of interacting with digital media in games, performance, design, etc. Much like 3D films, VR had come and gone and come again as a way to spice up the use of digital media, but saw consistent development only in highly specialized areas. Military applications, first as training tools and later as medical treatment for PTSD, remain one of the few sectors to continually use and innovate in VR. NASA has used VR to help develop remote operation of rovers on Mars, and to enhance training programs. In the arts, individual performance groups, usually partnering with and supported by heavily funded media labs, continue to experiment with the immersive potential of a VR experience. The size of their budgets no doubt helped keep these efforts alive.

VR is again on the cusp. But there is reason for renewed enthusiasm. Current efforts in VR, fueled in part by the success of motion controllers like the Nintendo Wii and Microsoft's Kinect, have taken advantage of four important factors to once again attempt to bring VR into the mainstream: lower costs, higher performance, portability, and ubiquity. The first two are not surprising: computers are much more powerful than they were 20 years ago, and the cost of components has dropped significantly. Graphics are greatly improved, teasing again the potential for a "photo-realistic" or at least "photo-inspired" experience. Two of the leading products taking advantage of these improvements are the Oculus Rift, recently acquired by Facebook for two billion dollars, and the HTC Vive, a partnership between HTC and the Valve Corporation. Valve is the creator of Steam, the hugely dominant digital distribution platform for most games played on a PC today, leaving it poised to deploy the Vive to a large preexisting audience. Backed by these behemoth corporations, the headset makers have driven the cost down to hundreds instead of thousands of dollars. The audience for these devices is still a subset of the gaming population, those enthusiasts with the disposable income to pay for the cutting edge. But this audience is far larger than it was in the past, and the video game industry is now larger and more profitable than Hollywood.

A (highly idealized) depiction of using the Vive, running Steam VR.

But even with these factors the field could repeat the back-slide seen in the 1990s. Even at their relatively lower cost, the Oculus Rift and the HTC Vive are still beyond the budget of the average consumer, and the games designed for these systems are heavily weighted toward the "hard-core" audience of gamers: space simulators, shooters, and other war games. Even if one can afford the device, it must also be connected to and powered by an expensive desktop computer, again something that only a small subset of the market already possesses.

More important than higher performance or lower costs are the "portability" and "ubiquity" factors, and the clearest example of portability is Google's Cardboard. As the name implies, Cardboard consists of an extremely cheap cardboard headset that allows the user to slide their smartphone into the front, and it is powered by an app on their phone. The Cardboard app allows users to play games, explore environments, and build on these systems. Cardboard also uses the camera on the phone to allow for augmented reality experiences, mixing real physical environments with virtual elements. Cardboard is a low-cost and bare-bones answer to Brenda Laurel's critique of the over-promised and under-delivered VR market, one that says you have nothing to lose but $15 worth of cardboard and a few minutes of your time.

Create a [Unity] Virtual Reality Game in Seven Minutes (Google Cardboard)

Last is "ubiquity," and this is where Unity 3D is the key factor. The Unity game engine can be used to develop projects for all of the systems discussed so far. Thanks to more than a decade of development, Unity has the technologies, the extensibility, and most importantly the existing developers to be positioned for a variety of VR experiences. Combined with low-cost implementations like Google's Cardboard, it is now possible for a much larger audience not only to engage with VR, but to create it. This is why I believe Unity will play a key role in Virtual and Augmented Reality experiences in general, and participatory performance experiences in particular.

Applications: Unity in the Proscenium Arch

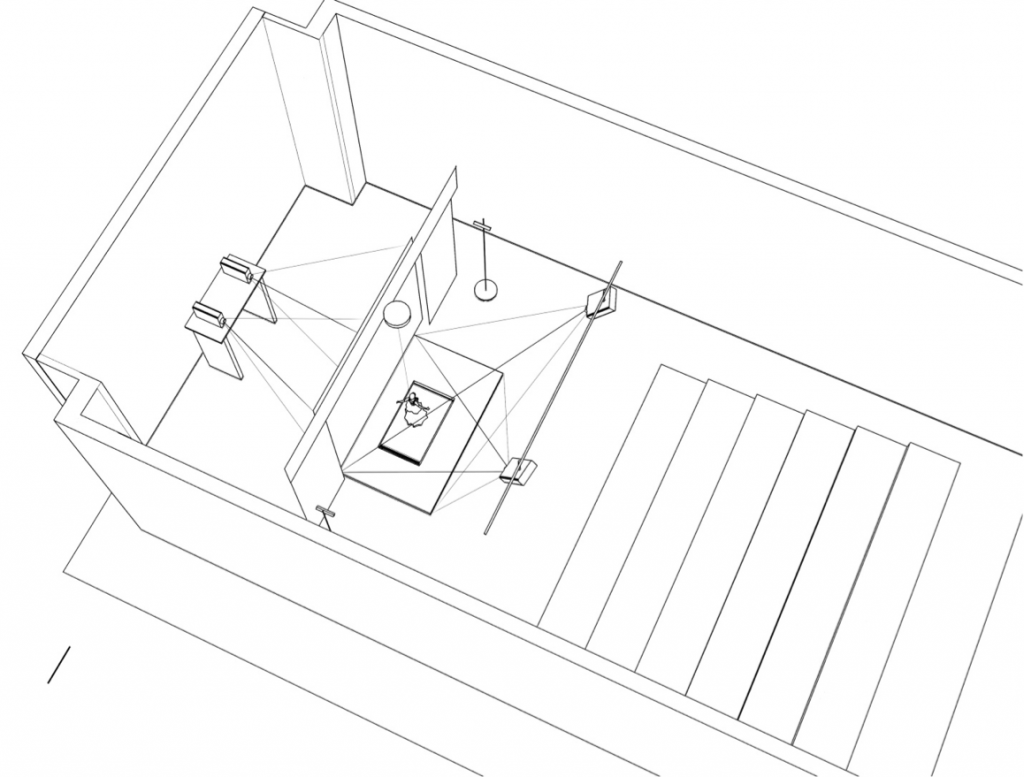

Unity's uses are not limited to groups at the edge, doing site-specific work or avant-garde interpretations of what performance can be. It's also being used in the creation of live performance placed on a stage. In 2015, the University of Wisconsin–Madison's Department of Theatre & Drama partnered with the Design Studies department to create the ALICE (Augmented Live Interactively Controlled Environment) project. The project's goals:

The project aims to accomplish this by enabling the performers (actor, dancer, musician, etc.) to interact with their stage environment in a dynamic and unique way. By integrating video projection, entertainment automation, motion capture, and virtual reality technologies together, the project enables new possibilities in live performance and enhance the audience’s experience.

Ultimately, ALICE is a proof-of-concept utilizing several of the systems I've discussed here. In a short one-person play, ALICE conveys several parts of the Alice in Wonderland story. A single actor is attached to a rigging system, allowing her to fly as needed. The stage upon which she's walking contains a treadmill. Behind her, the scrim displays an image from two projectors, overlapped to create a 3D effect.

The ALICE Project (Augmented Live Interactively Controlled Environment) is an interdisciplinary research project which melds existing technologies to pioneer a new live performance methodology. The project aims to accomplish this by enabling the performers (actor, dancer, musician, etc.) to interact with their stage environment in a dynamic and unique way.

None of the hardware is new theatrical technology, but here it is brought together in new ways and with some new and cleverly repurposed tools from game design. The actor's body is continually scanned by two Microsoft Kinects, motion capture devices typically used with an Xbox video game console but here jury-rigged for a new purpose. Microsoft is notably lax about these kinds of repurposing projects, tacitly encouraging their use in other mediums. The one in front captures all the movements and body positions of the actor, while the one on the side of the stage allows for tracking depth as well as verifying body position from another angle. The treadmill allows the actor to walk forward, while the environment projected behind her moves in time. This environment, with locations from Alice in Wonderland, is created in Unity. In addition to landscapes, objects such as tea cups and bubbles appear and can move in response to the actor's motions.

The critical innovation, when all of these items are used together, is that it permits the actor to move in concert with the digital medium without the concurrent interjection of another human being. While a similar effect could be created with a stagehand handling the fly rigging and a lighting op managing the projections, here the system allows the actor to be in control and removes a gap in response time. This allows flexibility and limited improvisation in the actor’s performance. Because the Unity engine can differentiate where the actor is in the environment, it can respond to the motions of the actor differently depending on the context. This means that it knows that when the actor, playing Alice, raises her arms above her head in the green field she is just gesticulating, but when she does it while falling down the rabbit hole, the rigging system responds by flying her up and down, and if she's next to a table it knows that this is the moment to move a teacup to her head. All of this is monitored by an additional system for safety, but the implications are clear. The project is not only responsive, it is situationally aware. In this sense, it is more "live," or at least more immediate, than if it were just a projection.
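To make the idea of situational awareness concrete, here is a minimal sketch in Python of how the same gesture might map to different stage responses depending on where the actor is in the virtual scene. This is not ALICE's actual code, which I have not seen; the zone names, gesture label, and action names are all hypothetical stand-ins for whatever the real system uses.

```python
from typing import Dict, Tuple

# Hypothetical labels: (zone in the virtual scene, detected gesture) -> stage action.
# Any pairing not listed here is treated as ordinary gesticulation.
RESPONSES: Dict[Tuple[str, str], str] = {
    ("rabbit_hole", "arms_raised"): "fly_actor",
    ("tea_table", "arms_raised"): "move_teacup_to_head",
}


def respond(zone: str, gesture: str) -> str:
    """Return the stage action for a gesture, given the actor's current zone."""
    return RESPONSES.get((zone, gesture), "no_action")


if __name__ == "__main__":
    # The same gesture produces a different response in each zone.
    for zone in ("green_field", "rabbit_hole", "tea_table"):
        print(zone, "->", respond(zone, "arms_raised"))
```

The point of the lookup table is that context, not the gesture alone, selects the response, which is what separates this kind of system from a projection that simply plays back.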

As the creators note, this is a proof-of-concept, but there are several important takeaways from this project for the future role of VR and AR in theatrical performance. The first is that these systems allow for more of the "live" in performance--it is not replicating the intermedial mixing of, say, a television on stage, playing the same video again and again. Second, the avenues for interaction are limited only by the amount of development put into the systems. One could easily imagine audiences having some control over the environment and objects in that environment, to which the actor could also respond, a sort of asynchronous participation. Part of what is supposedly unique to live theatre is the impact the audience has on production and performance. The potential use of Unity software and Kinect hardware makes that impact extensible.

Applications: Current and Speculative Evolutions

Much of my recent research has focused on participatory performance in public spaces, notably in the work of groups like Blast Theory and Rimini Protokoll. Both groups have investigated using audio to reshape an existing landscape, a kind of auditory augmented reality. Blast Theory's work Rider Spoke saw participants guided around an urban environment using small touch-screen devices (prior to the iPhone-prompted smartphone explosion) that used Wi-Fi signals to localize voice recordings participants made as they played a sort of hide-and-seek. Rimini Protokoll's Remote X guided participants through a series of vignettes across various cities, utilizing headsets with instructions from an artificial voice that arranged scenes and directed them on and off public transit. At the heart of both of these performances was the idea that more was at work in an urban setting than might have met the eye.

Yup, that's me! via Rimini Protokoll's Remote X

It is not hard to imagine an evolution of these productions using a device like Google's Cardboard. In addition to the possibilities of using the visual alongside the auditory, the low cost of a Cardboard device and the accessibility of Unity mean that costs could be significantly lowered for the producer. Blast Theory is supported by significant financial backing and the Mixed Reality Lab, while Rimini Protokoll's Remote X requires a human attendant and some localized transmission equipment to ensure proper execution. Both of these requirements could be either reduced or even eliminated by employing a combination of Cardboard and Unity.

I suspect that neither of these groups will do so, as they already have sophisticated apparatuses for their productions. But a comparison is useful. Large video game production studios continue to create their own tools for making games, turning to Unity for only some of their work. However, small indie developers have taken to Unity in a fury, vastly expanding the amount of content to explore. Similarly, the current wave of low-cost hardware built with familiar software tools makes it more likely we'll see new examples of participatory performance, similar to the work of Rimini Protokoll and Blast Theory, built using these tools.

I believe Rimini Protokoll is a particularly important model for future forms. The group’s early influences and current efforts are clearly connected to the concept of verbatim theatre, or documentary theatre. Using real locations or the speech of "real" (definition left up to the reader, of course) people to tell their story, these works have a strong ideological stance as well. To look for future potential, I suspect examples out of journalism are prescient. Nonny de la Peña's 2012 work Hunger in Los Angeles makes use of a simple VR experience, built in Unity 3D, to explore a real event:

The situation transpired at the First Unitarian Church on 6th Street in Los Angeles, when the woman running a food bank line is overwhelmed. “There are too many people,” she pants. And then, much louder, shouting in frustration, “There are too many people!” Only minutes later a man falls to the ground in a diabetic coma. The line is so long that his blood sugar has dropped dangerously low while waiting for food....While it is a story well worth reporting, coverage about the issue in the newspaper, on TV or on the web barely seem to resonate. In response, HUNGER IN LOS ANGELES, puts the public on the scene at the First Unitarian Church and makes them an eyewitness to the unfolding drama by providing a powerful embodied experience. By coupling the latest virtual reality goggles with compelling audio, which tricks the mind into feeling like one is actually there, HUNGER provides unprecedented access to the sights, sounds, feelings and emotions that accompany such a terrifying event. In fact, when this revolutionary brand of journalism was showcased at the 2012 Sundance Film Festival, the strong feelings of being present made audience members try to touch non-existent characters and many cried at the conclusion of the piece.

de la Peña created Hunger in Los Angeles without prior experience with Unity or with VR, and has since become an influential artist and speaker about the genre and about the issues her work addresses. The work is both an impressive first outing into a VR installation, and a testament to the potential of using Unity for performance and advocacy.

Left: Hunger in Los Angeles - An Immersive Journalism Premiere

Right: Google Cardboard Jump

The impact of this particular piece is powerful enough in the warehouse space that housed it, but it is easy to imagine an even more pointed recreation of events using the actual location as the setting. A device like Google's Cardboard, particularly using their new Jump ring to produce 360° of content, could use the GPS in the user’s phone to mark the location, and the app itself could fill in the rest using found audio, images, and text. The depth of a documentary-style recreation, sublimation, or improvisation is very exciting.
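The location-marking step imagined above amounts to a geofence: compare the phone's GPS fix against the coordinates of a known site and start the scene when the user is close enough. Below is a minimal sketch in Python using the standard haversine great-circle formula. The function names, the 50-metre radius, and the site coordinates are placeholders invented for illustration; they do not describe any existing Cardboard app or the real location of the event.

```python
import math
from typing import Dict, Optional, Tuple

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def scene_for(lat: float, lon: float,
              sites: Dict[str, Tuple[float, float]],
              radius_m: float = 50.0) -> Optional[str]:
    """Return the first scene whose site lies within radius_m of the user, else None."""
    for name, (site_lat, site_lon) in sites.items():
        if haversine_m(lat, lon, site_lat, site_lon) <= radius_m:
            return name
    return None


if __name__ == "__main__":
    # Placeholder coordinates, not the real site of the event.
    sites = {"food_bank_scene": (34.0600, -118.2900)}
    print(scene_for(34.0601, -118.2900, sites))  # roughly 11 m away: inside the fence
    print(scene_for(34.0700, -118.2900, sites))  # roughly 1.1 km away: outside
```

A real app would also have to cope with GPS drift and poor reception in dense urban settings, which a fixed radius only crudely accounts for.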

"An Empathy Machine." A conversation with actor and director Kevin Spacey about VR, among other things.

Conclusions

It is by no means certain that Unity will be the Swiss Army knife of Virtual or Augmented Reality experiences and performances. But Unity is currently the biggest kid on the block, and more importantly is a software manifestation of the methods and rhetorical conceits inherent in the construction of VR and AR. These conceits influence the creation of new works, and often prescribe the kinds of interaction that audiences and participants will experience in these productions. Learning the tool is a way of learning one language, the literal and metaphorical code behind what I believe will be the most significant kind of performance work in the next several decades.

![By Unity 3D (http://iosgaming.wikia.com/wiki/Unity_3D_Engine) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons](https://images.squarespace-cdn.com/content/v1/503cf4b9e4b043c74f73666b/1462990131472-XS0SW1ME5L7XNA28DKUL/Unity3D_Logo.jpg)